LiDAR mapping uses laser pulses to measure distances and build a detailed map of your home (in robot vacuums, usually a 2D floor plan), allowing your vacuum to understand its surroundings precisely. SLAM combines this data with input from the robot’s other sensors so it can estimate its location while building and updating the map. Together, these technologies enable your vacuum to navigate efficiently, avoid obstacles, and adapt to environmental changes. Keep exploring to discover more about how LiDAR and SLAM power these smart cleaning systems.

Key Takeaways

- LiDAR mapping uses laser pulses to measure distances and build detailed maps of the home, usually 2D floor plans in robotic vacuums.

- SLAM algorithms combine sensor data to simultaneously localize the robot and build real-time maps.

- LiDAR provides accurate obstacle detection, enabling efficient navigation and environment understanding.

- Sensor integration enhances SLAM performance by incorporating data from bump sensors, infrared, or cameras.

- Advanced navigation systems improve cleaning efficiency, adaptability, and obstacle avoidance in complex home layouts.

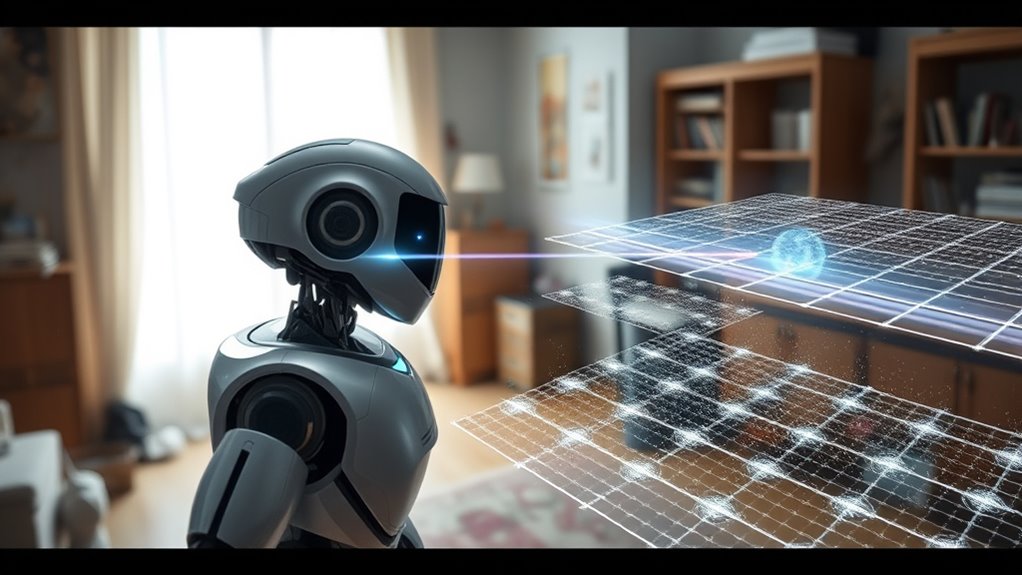

If you’re exploring how modern robotic vacuums navigate your home, understanding LiDAR mapping and SLAM technology is crucial. These systems are what allow your robot to clean efficiently and move around without getting lost or missing spots. At the core of this process is robot navigation, which combines sensor integration and algorithms to build a map of your environment in real time. LiDAR (Light Detection and Ranging) emits laser pulses that bounce off walls, furniture, and other obstacles, and the sensor uses the returning light to measure distances precisely. This sensor data feeds into SLAM (Simultaneous Localization and Mapping), which lets the robot build a detailed map while tracking its position within that map at the same time.
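As a rough illustration of how pulses become a map, here is a minimal sketch. It assumes a time-of-flight sensor (many vacuum LiDARs use triangulation instead), and the 1° angle step and 8 m maximum range are hypothetical values, not specifications of any real unit. It turns a round-trip time into a distance and converts one rotating sweep into points around the robot.

```python
import math

# Assumption: a time-of-flight sensor. Many vacuum LiDARs use triangulation instead.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_distance(round_trip_s: float) -> float:
    """Range from a pulse's round-trip time; the light travels out and back, so halve it."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(ranges, angle_min=0.0, angle_step=math.radians(1.0), max_range=8.0):
    """Convert one sweep (one range per beam, in metres) into (x, y) points in the sensor frame.

    angle_step and max_range are hypothetical values, not taken from any real sensor.
    Beams with no return (None) or outside (0, max_range] are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if r is None or r <= 0.0 or r > max_range:
            continue
        theta = angle_min + i * angle_step
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # A wall about 2 m away returns the pulse after roughly 13.3 nanoseconds.
    print(round(tof_distance(13.34e-9), 3))
    # Four beams 90 degrees apart; the third got no return.
    print(scan_to_points([1.0, 2.0, None, 0.5], angle_step=math.radians(90)))
```

The halving step is the key idea: the measured time covers the trip to the obstacle and back.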

Sensor integration is key here. Your vacuum’s LiDAR sensor works in tandem with other sensors, such as bump sensors, infrared sensors, or cameras, to gather thorough data about your home’s layout. Each covers gaps in the others: the LiDAR scans a horizontal plane at a fixed height, so it can miss low objects that a bump sensor catches, and it cannot see a drop-off that a downward-facing infrared cliff sensor detects. This multi-sensor approach helps the robot detect obstacles, avoid falls down stairs, and adapt to changes in the environment. By combining data from these sensors, the robot creates a dynamic, accurate map that updates as it cleans, allowing for optimized routes and efficient coverage. The map also lets the robot remember where it has already cleaned, preventing unnecessary repetition and saving battery life.
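The toy sketch below shows one way readings from different sensors might be merged into a single grid that also remembers cleaned areas. The cell states, the "most cautious label wins" rule, and every name here are hypothetical; real systems typically use probabilistic updates so a cell can change back when, say, a chair is moved.

```python
from enum import Enum


class Cell(Enum):
    UNKNOWN = 0
    FREE = 1
    OBSTACLE = 2
    CLIFF = 3


class FusedGrid:
    """Toy grid merging LiDAR, bump and cliff readings, plus a record of cleaned cells.

    Hypothetical rule: when sensors disagree, the more cautious label wins
    (CLIFF > OBSTACLE > FREE > UNKNOWN), using the enum values as priorities.
    """

    def __init__(self, width: int, height: int):
        self.cells = [[Cell.UNKNOWN] * width for _ in range(height)]
        self.cleaned = set()

    def _mark(self, x: int, y: int, label: Cell) -> None:
        if label.value > self.cells[y][x].value:
            self.cells[y][x] = label

    def lidar_free(self, x, y):
        self._mark(x, y, Cell.FREE)       # a beam passed through this cell

    def lidar_hit(self, x, y):
        self._mark(x, y, Cell.OBSTACLE)   # a beam reflected here

    def bump(self, x, y):
        self._mark(x, y, Cell.OBSTACLE)   # low object below the LiDAR's scan plane

    def cliff(self, x, y):
        self._mark(x, y, Cell.CLIFF)      # drop-off the LiDAR cannot see

    def visit(self, x: int, y: int) -> None:
        """Record a cleaned cell, but only if it is known to be safe floor."""
        if self.cells[y][x] is Cell.FREE:
            self.cleaned.add((x, y))

    def coverage(self) -> float:
        """Fraction of known-free cells that have been cleaned."""
        free = sum(row.count(Cell.FREE) for row in self.cells)
        done = sum(1 for x, y in self.cleaned if self.cells[y][x] is Cell.FREE)
        return done / free if free else 0.0


if __name__ == "__main__":
    grid = FusedGrid(4, 1)
    for x in range(4):
        grid.lidar_free(x, 0)          # LiDAR sees open floor along the row
    grid.cliff(3, 0)                   # cliff sensor finds a stair edge at x = 3
    for x in (0, 1, 3):
        grid.visit(x, 0)               # the visit to the stair edge is ignored
    print(grid.coverage())             # 2 of the 3 safe cells cleaned
```

The cliff reading overrides the LiDAR's "free" verdict for that cell, which is exactly the gap a single sensor would leave open.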

SLAM algorithms process all this incoming sensor data in real time, constantly updating the map and estimating the robot’s location. Unlike older models that relied on simple random navigation, modern robotic vacuums with SLAM can plan systematic cleaning paths, navigate around furniture, and return to their charging stations reliably. In effect, this technology gives your vacuum a sense of spatial awareness, loosely similar to how humans understand their surroundings. With improved robot navigation, your vacuum can handle complex floor plans, narrow spaces, and cluttered rooms more effectively.
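SLAM has two intertwined halves: estimating the robot's pose and updating the map. The sketch below covers only the mapping half, using the standard log-odds occupancy-grid technique and assuming the pose is already known (a full SLAM system estimates it, for example by matching each scan against the map). It is not any particular vacuum's algorithm; the increments, clamp limits, and function names are hypothetical.

```python
import math

# Hypothetical tuning values for the log-odds update.
L_OCC, L_FREE = 0.85, -0.4
L_MIN, L_MAX = -5.0, 5.0  # clamping lets cells change again if furniture moves


def bresenham(x0, y0, x1, y1):
    """Grid cells on the line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def to_cell(x, y, resolution):
    """Map a position in metres to integer grid indices."""
    return math.floor(x / resolution), math.floor(y / resolution)


def integrate_scan(logodds, pose, ranges, angle_step, resolution):
    """Fold one scan into a sparse log-odds grid, assuming the pose (x, y, heading) is KNOWN.

    Cells a beam passes through become more likely free; the cell where it ends
    becomes more likely occupied. Beam i points at heading + i * angle_step.
    """
    px, py, heading = pose
    start = to_cell(px, py, resolution)
    for i, r in enumerate(ranges):
        if r is None:
            continue  # no return on this beam
        theta = heading + i * angle_step
        end = to_cell(px + r * math.cos(theta), py + r * math.sin(theta), resolution)
        ray = bresenham(*start, *end)
        for cell in ray[:-1]:
            logodds[cell] = max(L_MIN, logodds.get(cell, 0.0) + L_FREE)
        logodds[end] = min(L_MAX, logodds.get(end, 0.0) + L_OCC)


def occupancy_probability(l):
    """Convert log-odds back to a probability in (0, 1)."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))
```

Log-odds make each update a simple addition, so repeated sightings of the same wall steadily build confidence while a single noisy beam has limited effect.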

Understanding how LiDAR mapping and SLAM work together reveals why these vacuums are so smart. They don’t just bump into things and hope for the best; they actively analyze their environment, learn from it, and adapt. Sensor integration helps them gather the information they need, while SLAM algorithms process this data to maintain an accurate, up-to-date map. As a result, your cleaning device becomes more autonomous, efficient, and reliable. So, the next time you see your robotic vacuum expertly weaving around furniture or seamlessly returning to its dock, you’ll know that sophisticated robot navigation, powered by LiDAR and SLAM, is behind its impressive performance.

Frequently Asked Questions

How Does Lidar Mapping Improve Robotic Navigation Accuracy?

Lidar mapping boosts your robot’s navigation accuracy by providing precise distance measurements of its surroundings. Sensor calibration keeps those measurements accurate by correcting systematic errors such as a consistent range bias or an offset in how the sensor is mounted. Data fusion combines lidar data with other sensors like cameras or IMUs, giving a more complete view of the surroundings. This integration helps your robot navigate more reliably, even in complex or dynamic environments, by improving perception and reducing collisions and localization mistakes.
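Calibration covers several things (mounting offsets, timing, range bias). Here is a minimal sketch of the simplest case, assuming the sensor's range error is linear: measure targets at known distances, fit `true ≈ scale * measured + offset` by ordinary least squares, and apply the correction to later readings. The function names and the 2%-plus-3-cm error in the example are hypothetical.

```python
def fit_linear_calibration(measured, true):
    """Least-squares fit of true ≈ scale * measured + offset from reference targets."""
    n = len(measured)
    if n < 2 or n != len(true):
        raise ValueError("need at least two paired reference measurements")
    mean_m = sum(measured) / n
    mean_t = sum(true) / n
    sxx = sum((m - mean_m) ** 2 for m in measured)
    if sxx == 0:
        raise ValueError("reference distances must differ")
    sxy = sum((m - mean_m) * (t - mean_t) for m, t in zip(measured, true))
    scale = sxy / sxx
    offset = mean_t - scale * mean_m
    return scale, offset


def apply_calibration(reading, scale, offset):
    """Correct a raw range reading with the fitted linear model."""
    return scale * reading + offset


if __name__ == "__main__":
    true = [0.5, 1.0, 2.0, 4.0]                    # metres, known target distances
    measured = [1.02 * t + 0.03 for t in true]     # hypothetical: reads 2% long plus 3 cm
    scale, offset = fit_linear_calibration(measured, true)
    print(apply_calibration(1.02 * 3.0 + 0.03, scale, offset))  # close to 3.0
```

Real sensors can have nonlinear or temperature-dependent errors, in which case a linear model is only a first approximation.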

What Are the Limitations of Current SLAM Techniques?

You’ll find current SLAM techniques limited by several factors. Sensor fusion can struggle to integrate data accurately from multiple sensors, leading to errors. Small errors in wheel odometry and heading accumulate as drift, which SLAM must keep correcting, for example by recognizing a place it has seen before (loop closure). Glass, mirrors, and other reflective or transparent surfaces can also return misleading LiDAR readings. Meanwhile, heavy processing demands slow real-time performance and increase energy consumption. These limitations can hinder the precision and efficiency of robotic navigation, especially in complex environments. Improving algorithms and hardware is essential to overcome these hurdles and enhance SLAM reliability.
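Drift is a large part of why SLAM is needed at all: position estimated from odometry alone accumulates error without bound, and the mapping side exists partly to pull it back. This toy simulation (not a model of any real robot) shows the effect: the robot truly drives straight, but its heading estimate gains a small constant bias each step. The 5 cm step and 0.1° bias are hypothetical.

```python
import math


def dead_reckoning_error(steps, step_length, heading_bias_per_step):
    """Toy drift model: the robot truly drives straight along x, but its heading
    estimate gains a small constant bias every step. Returns how far the
    estimated final position ends up from the true one (metres)."""
    true_x = steps * step_length
    est_x = est_y = heading = 0.0
    for _ in range(steps):
        heading += heading_bias_per_step      # the error compounds; it never averages out
        est_x += step_length * math.cos(heading)
        est_y += step_length * math.sin(heading)
    return math.hypot(est_x - true_x, est_y)


if __name__ == "__main__":
    # Hypothetical numbers: 5 cm steps and a 0.1 degree heading error per step.
    for n in (100, 200, 400):
        print(n, round(dead_reckoning_error(n, 0.05, math.radians(0.1)), 3))
```

Because the heading error feeds into every later step, the position error grows faster than the distance travelled, which is why periodic correction against the map matters.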

How Does Environmental Lighting Affect Lidar Performance?

Imagine lighting as the sun’s glare on a mirror: harsh yet revealing. Because LiDAR supplies its own light, it works in the dark and is not affected by shadows the way a camera is. Bright sunlight is the main lighting concern: it adds ambient infrared light at or near the sensor’s wavelength, which introduces noise and can reduce range and accuracy. Sensors counter this with optical filters and software noise reduction. While LiDAR works well in most conditions, strong direct sunlight can still challenge its ability to produce precise maps, so stay vigilant about how your robot performs in sunny rooms.
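One common software noise-reduction step is a median filter over neighbouring beams. The sketch below is an illustrative assumption, not a description of any specific product's firmware: it discards implausible ranges, then replaces each reading with the median of its neighbours, removing isolated spikes such as a spurious return caused by ambient light. The range limits and window size are hypothetical.

```python
import statistics


def clean_scan(ranges, min_range=0.05, max_range=8.0, window=5):
    """Suppress noise in one LiDAR sweep (ranges in metres, None = no return).

    1. Discard returns outside [min_range, max_range] as implausible.
    2. Replace each remaining range with the median of the valid ranges in a
       window centred on it, which removes isolated spikes.
    The limits and window size are hypothetical; the sweep is treated as a
    straight list, ignoring that a 360-degree scan wraps around.
    """
    valid = [r if r is not None and min_range <= r <= max_range else None for r in ranges]
    half = window // 2
    filtered = []
    for i, r in enumerate(valid):
        if r is None:
            filtered.append(None)
            continue
        neighbours = [v for v in valid[max(0, i - half): i + half + 1] if v is not None]
        filtered.append(statistics.median(neighbours))
    return filtered


if __name__ == "__main__":
    # A spurious 0.3 m return in the middle of a flat wall 2 m away.
    print(clean_scan([2.0, 2.0, 0.3, 2.0, 2.0]))
    # An out-of-range reading becomes None instead of a fake obstacle.
    print(clean_scan([2.0, 99.0, 2.0]))
```

The trade-off is that a genuine thin object, such as a chair leg seen by only one beam, can be filtered out along with the noise, so the window size has to balance the two.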

Can Lidar Be Integrated With Other Sensors for Better Mapping?

Yes, you can integrate LiDAR with other sensors for better mapping through sensor fusion. By combining data from cameras, IMUs, and ultrasonic sensors, you enhance accuracy and robustness. Time synchronization aligns all sensor inputs to a common clock so that readings taken at different moments are not mixed up, preventing inconsistencies. This integrated approach improves your mapping, especially in challenging environments, leading to more reliable and detailed results in your navigation or surveying projects.
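Sensors rarely sample at the same instants, so one common synchronization approach is to interpolate the faster stream at the slower stream's timestamps. This minimal sketch assumes both sensors already share a clock and uses IMU yaw (in radians, assumed unwrapped so it never jumps at ±π) as the example signal; the function names and the 100 Hz rate are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled signal at time t.

    timestamps must be sorted and share a clock with t. Returns None when t lies
    outside the recorded range, since extrapolating would be a guess.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def pair_scans_with_imu(scan_times, imu_times, imu_yaw):
    """Attach an interpolated IMU yaw (radians, assumed unwrapped) to each scan time."""
    return [(ts, interpolate_at(imu_times, imu_yaw, ts)) for ts in scan_times]


if __name__ == "__main__":
    imu_times = [0.00, 0.01, 0.02, 0.03]       # IMU at a hypothetical 100 Hz
    imu_yaw = [0.000, 0.002, 0.004, 0.006]     # robot turning slowly
    scan_times = [0.015, 0.025, 0.05]          # last scan has no IMU data yet
    print(pair_scans_with_imu(scan_times, imu_times, imu_yaw))
```

Returning `None` rather than extrapolating makes a missing or late sensor reading visible to the fusion step instead of silently inventing one.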

What Future Advancements Are Expected in Lidar and SLAM Technology?

Imagine your world merging seamlessly like a symphony: future LiDAR and SLAM technology is expected to combine AI and sensor fusion for smarter, faster mapping. You can expect improved accuracy, real-time updates, and better obstacle detection. As sensors become more sophisticated, autonomous vehicles and robots should navigate complex environments more reliably, with the potential to transform everyday life. This evolution aims to turn raw data into intuitive insights, making your interaction with technology more natural and precise.

Conclusion

Now that you know how LiDAR mapping and SLAM technology work, you’re better equipped to understand smart devices and robotics. These tools are transforming the way you clean and navigate your home, making tasks easier than ever. Remember, knowledge is power, and staying informed means you’re always a step ahead. So don’t wait for the dust to settle: embrace the future now and keep up with these cutting-edge innovations.